The AI Fairness Cluster is composed of the European projects AEQUITAS, BIAS, FINDHR, and MAMMOth, funded through the Horizon Europe program. This network is a crucial component of the European Commission's strategy to ensure the trustworthiness of AI, and it focuses on developing and deploying human-centric, sustainable, secure, inclusive, and trustworthy AI technology.

Mission

What is the cluster’s mission?

The AI Fairness Cluster brings together projects united by a shared mission: identifying and mitigating bias, and making sure AI systems contribute to diversity and inclusion.

Goals

What are the cluster's goals?

Members

Who are the cluster members?

AEQUITAS will develop a framework to tackle the multiple manifestations of bias and unfairness in AI by providing a controlled experimentation environment for AI developers.

BIAS will empower the AI and Human Resources Management (HRM) communities by addressing and mitigating algorithmic biases.

FINDHR will facilitate the prevention, detection, and management of discrimination in algorithmic hiring and closely related areas involving human recommendation.

Highlights

Check out our projects’ highlights

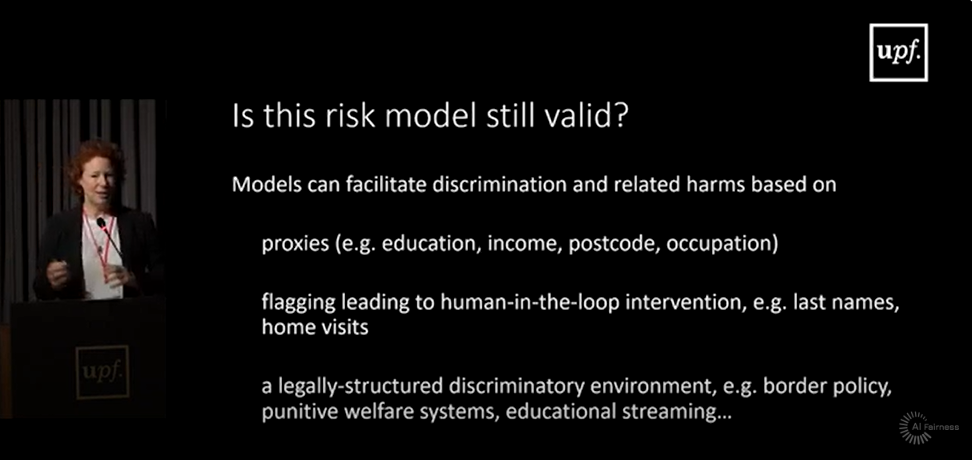

AI Fairness Cluster Conference & AIMMES Workshop 2025

Join the 2nd edition of the AI Fairness Cluster's biggest event to explore cutting-edge solutions for addressing AI bias and ensuring equitable, transparent, and accountable technologies.

Shaping Responsible and Inclusive AI in Recruitment: A Capacity-Building Series

Join the BIAS Project to shape fair and ethical AI in the workplace! From February to December 2025, this free training program across Europe equips HR professionals, AI specialists, and NGOs with the skills to address bias and ethical challenges in the use of AI in HR. It features interactive case studies, self-reflection, and hands-on practice. Sessions are held online, in person, or in a blended format, and participants receive a certificate upon completion. Learn more and register today!

BIAS Webinar | Intersectional Fairness in AI: Legal Frameworks, Tools, and Learning Opportunities

Join us online on December 10 for a workshop with BIAS, DIVERSIFAIR, and Trustworthy AI Helix to shape the future of fair, ethical AI in recruitment and workplace decisions.

AEQUITAS AI Fairness Event: Addressing Bias, Stereotypes, and Discrimination in AI Systems

Join us on December 6, 2024, in Bologna, Italy, for the AI Fairness Conference, co-organized by the AEQUITAS and FAIR projects, to engage with top experts as they address bias, stereotypes, and discrimination in AI through keynotes, panels, and networking.

BIAS Co-creation Workshop | Business Modelling for Trustworthy AI Recruitment

Join us for the BIAS online co-creation workshop, "Business Modelling for Trustworthy AI-Powered Recruitment Platforms," on October 29 from 10:00–12:00 CET. This interactive event brings together diverse participants to collaboratively develop a SWOT analysis, a crucial element of a sustainable AI recruitment business model. The workshop will cover topics such as the EU AI Act and include breakout sessions on stakeholder mapping and SWOT analysis. It will be hosted online via MS Teams.

AIMMES 2024 Proceedings

The proceedings from the 1st Workshop on AI Bias: Measurements, Mitigation, Explanation Strategies, co-located with the AI Fairness Cluster Inaugural Conference 2024 in Amsterdam on March 20, 2024, are now available! Check them out today.

BIAS Data Donation Campaign

Champion diversity and innovation in your company: make a difference with your cover letters and CVs! Until 31 January 2025, share anonymized cover letter and CV samples and become our next case study.

AI Fairness Cluster Inaugural Conference & AIMMES'24

The AI Fairness Cluster Inaugural Conference on March 19, 2024, in Amsterdam, brings together experts and researchers from 50+ institutions to address discrimination risks in AI, featuring keynotes, project presentations, and panels, while the co-located AIMMES'24 workshop on March 20 explores technical aspects of measuring, mitigating, and explaining AI biases.

AI Fairness Cluster Flyer

Explore the captivating world of the AI Fairness Cluster through our flyer. Uncover the mission, goals, and vibrant community of members shaping the future of artificial intelligence. Dive into a journey of discovery!

BIAS Trustworthy AI Helix

Join the Trustworthy AI Helix within the BIAS community for project updates and collaboration with experts in Trustworthy AI. This community is essential for disseminating and exploiting BIAS project results, creating a robust and self-sustaining network. External stakeholders are invited to join and contribute to this thriving community.

MAMMOth's FairBench library

MAMMOth's FairBench Python library offers comprehensive AI fairness exploration: it generates reports and stamps and supports multivalue, multiattribute assessments. It integrates with NumPy, pandas, TensorFlow, and PyTorch for efficient ML exploration.
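To give a feel for what a multivalue, multiattribute assessment involves, here is a small hand-written sketch in plain Python. It does not use FairBench's API: the functions `positive_rates` and `fairness_report` and the summary names `maxdiff` and `minratio` are hypothetical, chosen only for illustration. For every value of every sensitive attribute it computes the rate of positive predictions, then summarizes each attribute by the largest gap and the smallest ratio between its groups.

```python
from collections import defaultdict


def positive_rates(predictions, groups):
    """Fraction of positive (1) predictions for each group value."""
    counts = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        counts[group] += 1
        positives[group] += pred
    return {g: positives[g] / counts[g] for g in counts}


def fairness_report(predictions, sensitive):
    """Summarize positive-rate disparities for each (possibly multi-valued)
    sensitive attribute. Hypothetical helper, not the FairBench API."""
    report = {}
    for attribute, groups in sensitive.items():
        rates = positive_rates(predictions, groups)
        low, high = min(rates.values()), max(rates.values())
        report[attribute] = {
            "rates": rates,
            "maxdiff": high - low,  # largest gap between any two groups
            "minratio": low / high if high > 0 else 1.0,  # worst-to-best ratio
        }
    return report


# Toy binary predictions with two sensitive attributes (one has three values).
predictions = [1, 0, 1, 1, 0, 1, 0, 0]
sensitive = {
    "gender": ["f", "m", "f", "m", "nb", "m", "f", "nb"],
    "age": ["young", "young", "old", "old", "young", "old", "young", "old"],
}
report = fairness_report(predictions, sensitive)
for attribute, entry in report.items():
    print(attribute, round(entry["maxdiff"], 3), round(entry["minratio"], 3))
# gender 0.667 0.0
# age 0.5 0.333
```

Reporting several summaries per attribute, rather than a single score, mirrors the idea of exploring fairness from multiple angles; a library such as FairBench offers far richer metrics and reporting than this sketch.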

BIAS National Labs

BIAS is creating stakeholder communities in two key ecosystems: one focused on HR and recruitment policies, and the other comprising AI experts. Participants will be engaged in various BIAS activities, have access to personalized project information, and play an active role. If interested, please apply to join this initiative.

MAMMOth's FLAC approach for fairness-aware representation learning

MAMMOth's FLAC minimizes mutual information between model features and a protected attribute, using a sampling strategy to highlight underrepresented samples. It frames fair representation learning as a probability matching problem with a bias-capturing classifier, theoretically ensuring independence from protected attributes.
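FLAC itself operates on learned feature similarities guided by a bias-capturing classifier and does not compute the quantity below directly. The sketch only illustrates what "mutual information between model features and a protected attribute" measures, assuming the features have already been discretized. It uses a simple plug-in estimate of I(Z; A) in nats; the function name `mutual_information` and the toy data are hypothetical. A feature that copies the attribute yields log 2, while a feature balanced within each group yields 0, which is the independence FLAC aims for.

```python
import math
from collections import Counter


def mutual_information(features, protected):
    """Plug-in estimate of I(Z; A) in nats from paired discrete samples."""
    n = len(features)
    joint = Counter(zip(features, protected))  # counts of (z, a) pairs
    pz = Counter(features)                     # marginal counts of z
    pa = Counter(protected)                    # marginal counts of a
    mi = 0.0
    for (z, a), count in joint.items():
        p_za = count / n
        mi += p_za * math.log(p_za / ((pz[z] / n) * (pa[a] / n)))
    return mi


protected = ["a", "a", "b", "b", "a", "b", "a", "b"]
leaky = [0, 0, 1, 1, 0, 1, 0, 1]  # feature encodes the protected attribute
fair = [0, 1, 0, 1, 0, 1, 1, 0]   # feature balanced within each group

print(round(mutual_information(leaky, protected), 4))  # 0.6931 (= log 2)
print(mutual_information(fair, protected))             # 0.0
```

In a learning setting this plug-in estimate is not differentiable, which is one reason methods like FLAC rely on surrogate objectives over feature similarities instead of computing mutual information explicitly.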